On BSV Scalability

You may be surprised that I'm writing about Bitcoin Satoshi Vision, a fork of a fork of Bitcoin that's spearheaded by a serial con man and his patron, a fellow who spent 5 years on ICE's most wanted list. I think it's interesting to view Bitcoin forks as "alternative reality" experiments - we can see what might have happened if the ecosystem chose a different direction for development.

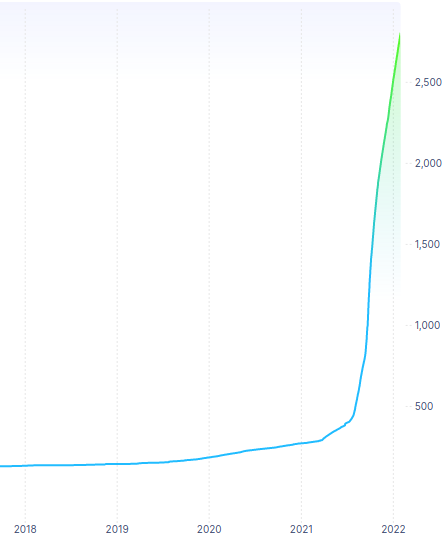

Since the fork wars of 2017 / 2018, I haven't spent much time researching these networks because they haven't been interesting to me. BCH and BSV both claimed to be seeking massive on-chain scalability, but their blocks have been far smaller than Bitcoin blocks since the fork! Until recently... when something changed with BSV and their block sizes surged in the summer of 2021 to the point that the total blockchain size is now 3 terabytes - 8X the size of Bitcoin's blockchain.

As such, it's finally worth taking a look at BSV to see how their node software is holding up under the stress.

Adventures in Running a BSV Node

I ran my tests on my usual benchmark machine against Bitcoin SV 1.0.10. This machine only has a 1 terabyte hard drive, so I configured the node to prune all but the most recent 400 gigabytes of block data. However, the first time I tried to run the node, it immediately halted with an error saying I was missing required configuration parameters:

```
Mandatory consensus parameter is not set. In order to start bitcoind you must set the following consensus parameters: "excessiveblocksize" and "maxstackmemoryusageconsensus". In order to start bitcoind with no limits you can set both of these parameters to 0 however it is strongly recommended to ensure you understand the implications of this setting.
```

I can't proceed without pointing out the absurdity of having node operators manually set consensus parameters - it's basically asking to create consensus failures. If you were around during the Block Size Debate and subsequent Fork Wars, you may recognize the phrase "excessiveblocksize" - it's from a concept created by Bitcoin Unlimited, a Bitcoin Cash software project. Much ink has been spilled on the ridiculousness of this concept.

Naturally, BSV appears to have taken it a step further by adding yet another manually configured parameter for the stack memory limit. So what does my bitcoin.conf look like for this test?

```
assumevalid=0
dbcache=24000
excessiveblocksize=4000000000
maxstackmemoryusageconsensus=100000000
prune=400000
```

On my first attempt, the BSV node synced to height 528519 and crashed after running out of disk space, with 700 GB of blocks stored. What? I told it to only keep 400 GB stored...

Next I changed prune from 400 GB to 40 GB and tried again, but the node still crashed after running out of disk space. The same thing happened when I tried setting prune to a mere 4 GB. Clearly, something is amiss! I've never seen a node fail to respect its prune settings before.

For my fourth syncing attempt I decided to pay a bit more attention and run some queries against the node to try to figure out what was going wrong. When I saw that my bitcoin data directory had grown to nearly 700 GB, I noted that the node's chain tip was only at block 444,000...

```
$ ./bitcoin-cli getinfo
{
  "version": 101001000,
  "protocolversion": 70016,
  "blocks": 444690,
  "timeoffset": 0,
  "connections": 8,
  "proxy": "",
  "difficulty": 310153855703.4333,
  "testnet": false,
  "stn": false,
  "relayfee": 0.00000250,
  "errors": "Warning: The network does not appear to fully agree! Some miners appear to be experiencing issues. A large valid fork has been detected. We are in the startup process (e.g. Initial block download or reindex), this might be reason for disagreement.",
  "maxblocksize": 4000000000,
  "maxminedblocksize": 128000000,
  "maxstackmemoryusagepolicy": 100000000,
  "maxstackmemoryusageconsensus": 100000000
}
```

And oddly, several peers had blocks in flight (being downloaded by my node) in the 700,000 range...

```
$ ./bitcoin-cli getpeerinfo
[
  {
    "id": 0,
    "version": 70016,
    "subver": "/Bitcoin SV:1.0.10/",
    "startingheight": 724807,
    "synced_headers": 724851,
    "synced_blocks": 702745,
    "inflight": [
      703005,
      703006,
      703007,
      703008,
      703009,
      703038,
      703090,
      703091,
      703092,
      703093,
      703094,
      703095,
      703096,
      703118,
      703119
    ]
  }
```

and the node was seeing multiple chain tips...

```
$ ./bitcoin-cli getchaintips
[
  {
    "height": 724851,
    "hash": "000000000000000007140a4554c6136e3284be8a14b71dcc3ec20c65a6670aa0",
    "branchlen": 279763,
    "status": "headers-only"
  },
  {
    "height": 724835,
    "hash": "0000000000000000052c393c19802ab687c38e22332ce918d14e9d4fcd142cfc",
    "branchlen": 279747,
    "status": "headers-only"
  },
  {
    "height": 592656,
    "hash": "00000000000000000cd2c787d48b7e71eedf0725888a5daae9655b8993ddee2e",
    "branchlen": 147568,
    "status": "valid-headers"
  },
  {
    "height": 445088,
    "hash": "000000000000000000d92f75a25752aff14f38cbea150b44a91f651f833660f1",
    "branchlen": 0,
    "status": "active"
  }
]
```

Inspecting the block files on disk showed that some WERE deleted; the oldest remaining one was blk00725.dat.

At this point I stopped the node and restarted it. After the restart, my node was no longer downloading more blocks; it was just spinning the CPU to validate the blocks it had already downloaded.

Eventually, once it caught up on processing all the downloaded blocks, it started pruning again and disk usage stayed around 400 GB.

TL;DR - there appears to be a bug in BSV's syncing logic that causes it to ignore the moving window that limits how far ahead of the validated chain tip a node downloads blocks. This bug is probably somewhere in net_processing.cpp. Basically, the node will keep downloading blocks without regard for what block height has currently been validated. Because the node can't prune data until after it has been validated, the disk keeps filling up until it runs out of space and the node crashes. I figure I'm the first person to notice this because nobody bothers to run BSV nodes.
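To make the suspected failure mode concrete, here is a minimal Python sketch of a bounded download window. It is loosely modeled on Bitcoin Core, whose net_processing.cpp caps how far ahead it fetches blocks with the constant BLOCK_DOWNLOAD_WINDOW (1024 blocks); the function names, and the simplification of measuring the window from the validated tip, are illustrative assumptions rather than BSV's or Core's actual code.

```python
# Hypothetical sketch, not BSV's (or Bitcoin Core's) actual code. The window
# size mirrors Bitcoin Core's BLOCK_DOWNLOAD_WINDOW constant (1024 blocks).
BLOCK_DOWNLOAD_WINDOW = 1024


def can_request(height: int, validated_tip: int,
                window: int = BLOCK_DOWNLOAD_WINDOW) -> bool:
    """True if `height` is ahead of the validated tip but inside the window."""
    return validated_tip < height <= validated_tip + window


def next_requests(validated_tip: int, in_flight: set, best_header_height: int,
                  max_new: int = 16, window: int = BLOCK_DOWNLOAD_WINDOW) -> list:
    """Choose up to `max_new` block heights to request next.

    Heights are taken in order starting just above the validated tip,
    skipping ones already in flight, and never past validated_tip + window.
    Since blocks can only be pruned after validation, this cap bounds how
    much unvalidated (unprunable) data can pile up on disk.
    """
    upper = min(best_header_height, validated_tip + window)
    requests = []
    for height in range(validated_tip + 1, upper + 1):
        if height not in in_flight:
            requests.append(height)
            if len(requests) == max_new:
                break
    return requests


# Heights taken from the getinfo / getpeerinfo output above
validated_tip = 444_690
print(can_request(703_005, validated_tip))   # False: 258,315 blocks past the tip
print(next_requests(validated_tip, {444_691}, 724_851, max_new=3))
```

With a window like this in place, a node whose validated tip is at 444,690 would never have blocks around height 703,000 in flight, which is exactly what the getpeerinfo output shows happening.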

Node Sync Performance Results

The one positive aspect of BSV node syncing is that I got a consistent download speed of 50 MB/s to 80 MB/s. Why? Probably because all of the reachable BSV full nodes are running in data centers on gigabit fiber.

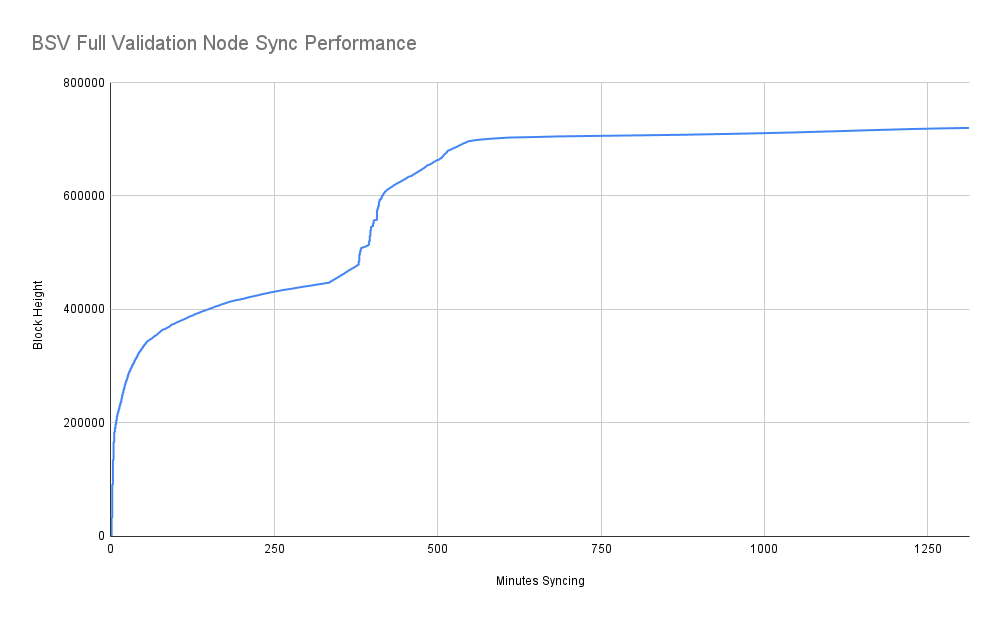

A full validation sync of Bitcoin Core to block 722,000 on my benchmark machine takes 396 minutes while a full validation sync of BSV to height 720,000 takes 1,313 minutes. So if BSV's blockchain is 8X larger but only takes 3.3X longer to validate, does this mean that BSV has achieved some respectable performance improvements? You can find my raw sync performance data here.
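The naive reading of those two sync times takes only a couple of lines of arithmetic: dividing them gives the 3.3X figure, and dividing the size ratio by that suggests BSV pushes roughly 2.4X more bytes through validation per minute. The 8X size ratio is the approximate figure from the introduction, so treat the output as a rough estimate.

```python
# Sync times reported above for the same benchmark machine
bitcoin_minutes = 396    # Bitcoin Core, full validation to block 722,000
bsv_minutes = 1_313      # BSV, full validation to height 720,000
size_ratio = 8           # BSV's chain is roughly 8X larger (approximate)

time_ratio = bsv_minutes / bitcoin_minutes
bytes_per_minute_ratio = size_ratio / time_ratio

print(f"BSV sync took {time_ratio:.1f}X as long")                               # 3.3X
print(f"i.e. ~{bytes_per_minute_ratio:.1f}X more bytes validated per minute")   # 2.4X
```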

We can see that the performance curve follows the same fairly smooth path that we see with Bitcoin as adoption increases over the years, until block 478,558, when BCH forked away from Bitcoin and blocks went from 1 MB to nearly empty. Then, when BSV forked away from BCH at block 556,766, another kill-off of adoption caused another drop in transaction volume, making syncing even faster because there was less data to validate. Then, nearly a year later (50,000 blocks), we see some activity starting to pick up until it really takes off around block 700,000 in the summer of 2021 and syncing performance drops significantly.

If the worst case of average weekly activity seen thus far continues, every week of blocks will add another hour of validation time to sync - 2 days of syncing per year of blockchain time. Not bad, right?

However, I don't think we can extrapolate future scalability so simply. For example, I can see from my logs that validating the 1 GB block at height 699154 took 10 seconds. If every block was of a similar nature it would add 3 hours of validation time per week of blockchain - 6 days of sync time per year of blockchain time. But wait, even these numbers are highly optimistic! To understand why, we must dig deeper.
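The back-of-the-envelope numbers in the two paragraphs above can be reproduced as follows, assuming the 10-minute target block interval (1,008 blocks per week) and a 52-week year. The text rounds 2.8 hours per week up to 3 and 2.2 days per year down to 2.

```python
BLOCKS_PER_WEEK = 7 * 24 * 60 // 10   # one block per ~10 minutes -> 1,008
WEEKS_PER_YEAR = 52


def hours_per_week(seconds_per_block: float) -> float:
    """Validation hours added by one week of blocks."""
    return BLOCKS_PER_WEEK * seconds_per_block / 3600


def days_per_year(hours_per_week_of_blocks: float) -> float:
    """Sync days added by one year of blocks."""
    return hours_per_week_of_blocks * WEEKS_PER_YEAR / 24


# Worst weekly average so far: ~1 hour of validation per week of blocks
print(f"{days_per_year(1):.1f} days/year")                                  # 2.2
# Every block like the 1 GB block at height 699154 (~10 s each)
weekly = hours_per_week(10)
print(f"{weekly:.1f} hours/week, {days_per_year(weekly):.1f} days/year")   # 2.8, 6.1
```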

BSV Transaction Characteristics

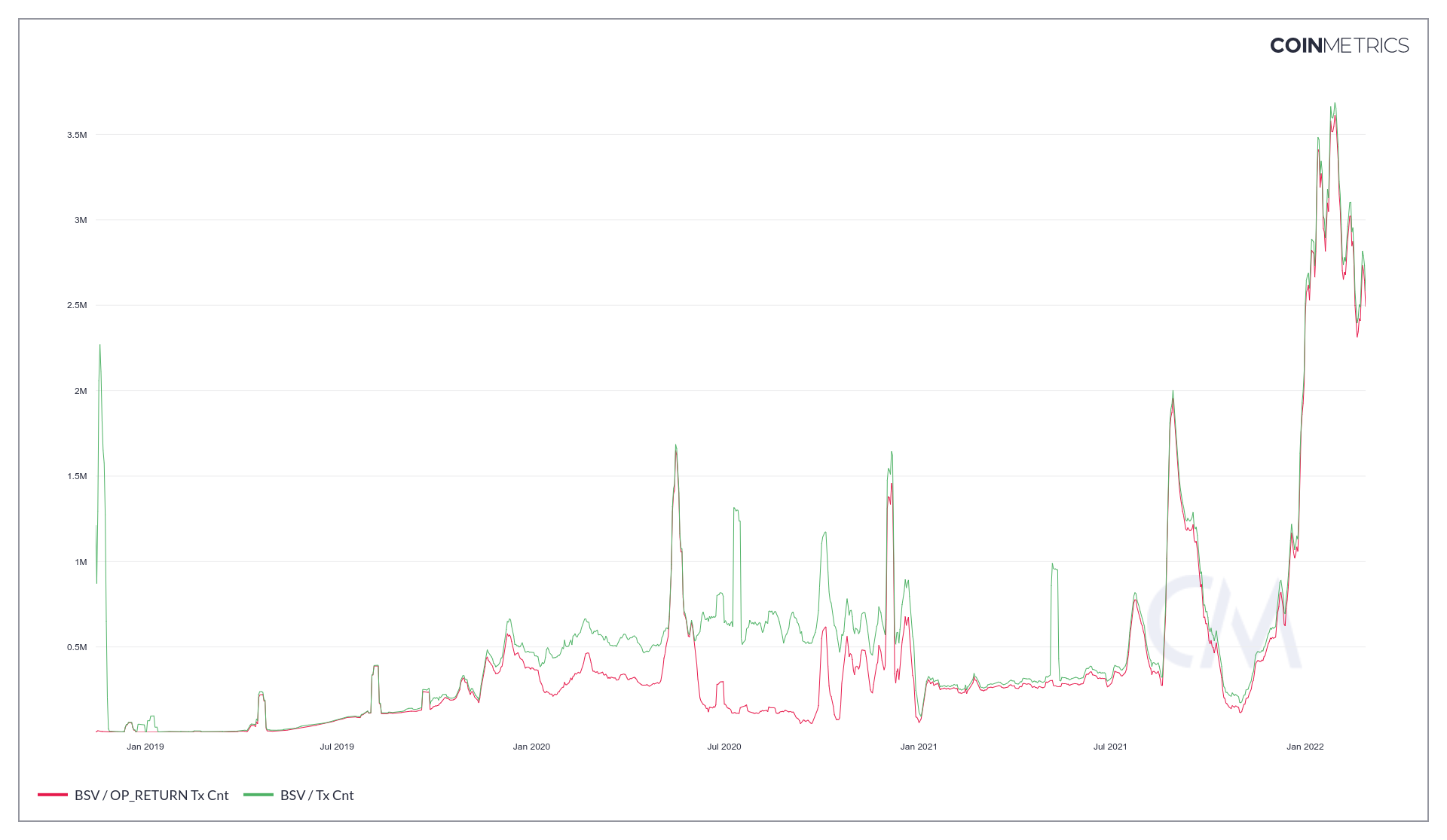

There are far more variables that affect validation resource requirements than merely the data size of a transaction / block. The vast majority of a node's computational resources are spent verifying transaction inputs to ensure that no double-spending has occurred and no money has been created unexpectedly. Why am I pointing this out? Because the vast majority of BSV block space is not taken up by data that actually gets validated.

The 1 GB block referenced earlier has 14,566 transaction inputs. For context, the average 1.5 MB Bitcoin block has 7,000 transaction inputs. If a BSV block was full of organic retail-like transactions, then we would expect a 1 GB block to be composed of around 4,500,000 transaction inputs and to require over 600X the validation resources of an average Bitcoin block. We can also get a data point from block 635141, which is 370 MB and has 1,498,816 transaction inputs. This block took my benchmark machine over 13 minutes to validate. As such, it's reasonable to expect a 1 GB block with 4,500,000 inputs to take at least 36 minutes for my machine to validate. And of course, when it takes more than 10 minutes to validate a block you're gonna have a bad time...
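The extrapolation can be checked with a few lines of Python. Like the paragraph, this sketch assumes validation cost scales roughly linearly with the number of inputs. Calibrating on block 635141 gives about 39 minutes for 4,500,000 inputs, above the "at least 36 minutes" floor, and it shows the two different baselines: about 643X the inputs of an average Bitcoin block, but about 309X the inputs of the data-heavy block 699154.

```python
# Data points quoted above
BTC_BLOCK_MB, BTC_BLOCK_INPUTS = 1.5, 7_000     # average Bitcoin block
BSV_1GB_BLOCK_INPUTS = 14_566                   # BSV block 699154 (~1 GB)
REF_INPUTS, REF_MINUTES = 1_498_816, 13         # BSV block 635141 (~370 MB)
RETAIL_1GB_INPUTS = 4_500_000                   # the text's rounded estimate
BLOCK_INTERVAL_MINUTES = 10

# Scale Bitcoin's input density up to a 1 GB (1,000 MB) block
inputs_per_gb = BTC_BLOCK_INPUTS / BTC_BLOCK_MB * 1_000
print(f"{inputs_per_gb:,.0f} inputs per GB")                                # 4,666,667

# Ratio against an average Bitcoin block and against block 699154
print(f"{RETAIL_1GB_INPUTS / BTC_BLOCK_INPUTS:.0f}X vs. Bitcoin")           # 643X
print(f"{RETAIL_1GB_INPUTS / BSV_1GB_BLOCK_INPUTS:.0f}X vs. block 699154")  # 309X

# Assume time scales linearly with input count, calibrated on block 635141
est_minutes = RETAIL_1GB_INPUTS * REF_MINUTES / REF_INPUTS
print(f"~{est_minutes:.0f} min, "
      f"{est_minutes / BLOCK_INTERVAL_MINUTES:.1f}X the block interval")    # ~39 min, 3.9X
```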

Why are BSV transactions taking up so much block space? Because they're being used for data storage via OP_RETURN! What is OP_RETURN? It's a script opcode used to mark a transaction output as provably unspendable; the data in the output doesn't get validated in any way, other than Bitcoin Core's default relay policy ensuring it doesn't exceed 80 bytes. From the Bitcoin Core 0.9.0 release notes:

This change is not an endorsement of storing data in the blockchain. OP_RETURN creates a provably-prunable output, to avoid data storage schemes – some of which were already deployed – that were storing arbitrary data such as images as forever-unspendable TX outputs, bloating bitcoin's UTXO database.

Storing arbitrary data in the blockchain is still a bad idea; it is less costly and far more efficient to store large amounts of data elsewhere.

We can see here that BSV completely removed the "max size" check for "data carrier" (AKA OP_RETURN) transaction outputs, whereas Bitcoin Core nodes by default refuse to relay transactions with OP_RETURN outputs greater than 80 bytes. The following chart is pretty amazing, as it shows that for the vast majority of BSV's existence, 95% or more of the transactions on the network have been used not for payments / value transfer, but for data storage. We can be sure that this is the case because OP_RETURN outputs are unspendable - any value that gets sent to them is burned (lost forever).
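To illustrate the size cap BSV dropped, here is a minimal Python sketch of the check Bitcoin Core applied by default at the time of writing. An output script that begins with OP_RETURN (opcode 0x6a) is provably unspendable, and Core's default -datacarriersize of 83 bytes leaves room for 80 bytes of payload plus the OP_RETURN and push opcodes. This is a simplification: Core's real standardness rules also require the rest of the script to be push-only, and the helper names here are hypothetical.

```python
OP_RETURN = 0x6a
OP_PUSHDATA1 = 0x4c
# Core's default -datacarriersize at the time: 80 data bytes + 3 opcode bytes
MAX_OP_RETURN_RELAY = 83


def is_data_carrier(script_pubkey: bytes) -> bool:
    """True if the output script starts with OP_RETURN (provably unspendable)."""
    return len(script_pubkey) > 0 and script_pubkey[0] == OP_RETURN


def core_default_accepts(script_pubkey: bytes) -> bool:
    """Simplified Bitcoin Core default policy for a data-carrier output: size cap only."""
    return is_data_carrier(script_pubkey) and len(script_pubkey) <= MAX_OP_RETURN_RELAY


def op_return_script(payload: bytes) -> bytes:
    """Build OP_RETURN <payload> with a minimal push (sketch handles <= 255 bytes)."""
    n = len(payload)
    if n <= 75:
        push = bytes([n])                # direct push opcode
    elif n <= 255:
        push = bytes([OP_PUSHDATA1, n])  # OP_PUSHDATA1 <1-byte length>
    else:
        raise ValueError("payload too large for this sketch")
    return bytes([OP_RETURN]) + push + payload


for size in (80, 81):
    script = op_return_script(b"\x00" * size)
    print(size, len(script), core_default_accepts(script))
# 80 83 True
# 81 84 False
```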

OK, so this revelation wasn't 100% surprising because we already knew that was the case back in 2019...

BSV transaction volume is now higher than BCH transaction volume butttttt 94% of BSV transactions are from a weather app. H/T @coinmetrics https://t.co/TbXyxYnP4P pic.twitter.com/s6r1lqQNym

— Jameson Lopp (@lopp) July 16, 2019

Though a few things have changed - WeatherSV seems to have stopped publishing weather data to the blockchain. I guess they didn't have a profitable business model.

However, there are other services that let you post tons of data to the blockchain, like Bitcoin Files! That's how you end up with stuff like this:

The biggest ~2G block is full of exactly SAME image. Just click the block link https://t.co/azBvbT2wH9 and compare any tx data text. Decode it and it's a dog.

— Bsvdata (@bsvdata) August 18, 2021

Astonished, who would want to fill a transparent blockchain with junks and pretending to be real? Make no sense. pic.twitter.com/rNFPpc6f3e

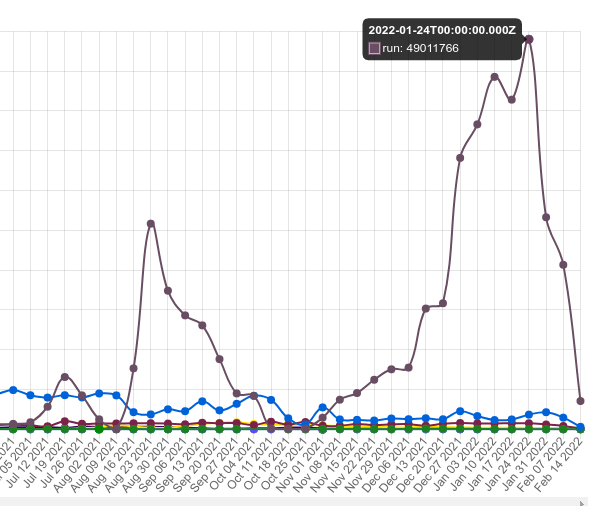

We can see from WhatsOnChain's stats that the most common type of OP_RETURN transaction for the past 6 months has been "Run", which recently peaked at nearly 50M transactions per week.

Run appears to be a smart contracting language that lets you create tokens, contracts, and other digital assets. And it appears to let you write the code for your assets and publish it directly to the blockchain via OP_RETURN.

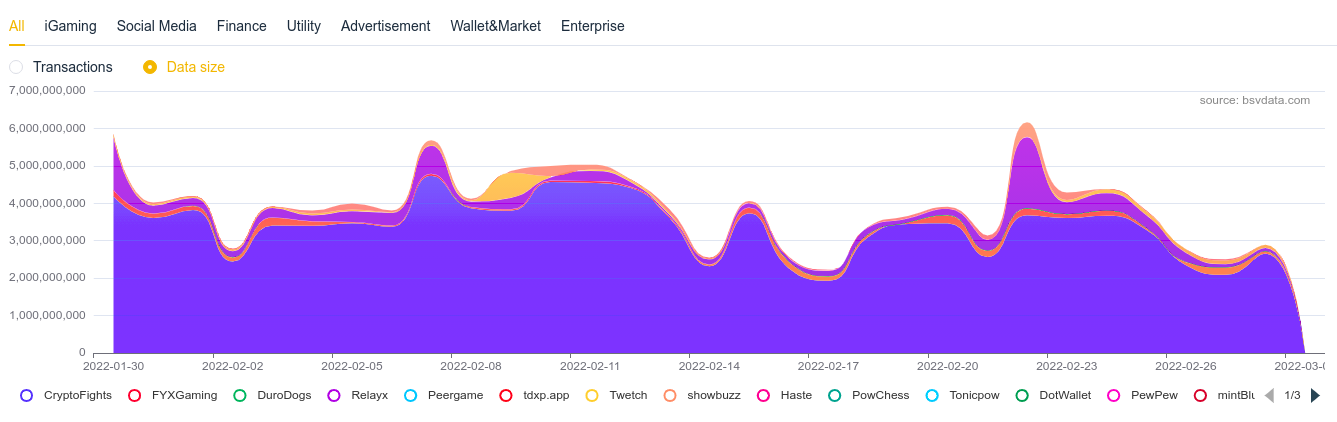

According to BSVData, the application that is adding the most data to the blockchain lately (about 3 GB per day) is CryptoFights, one of several applications that use the Run protocol.

Smoke and Mirrors

This is all part of the ruse. The BSV marketing machine would have you believe that their software is highly performant and can process enough on-chain transaction volume to handle the entire world's transacting needs. BSV leadership claims they are processing 1,000X as much data as Bitcoin, but that's not impressive in the slightest because the data in these blocks is being generated in such a way as to avoid requiring validation.

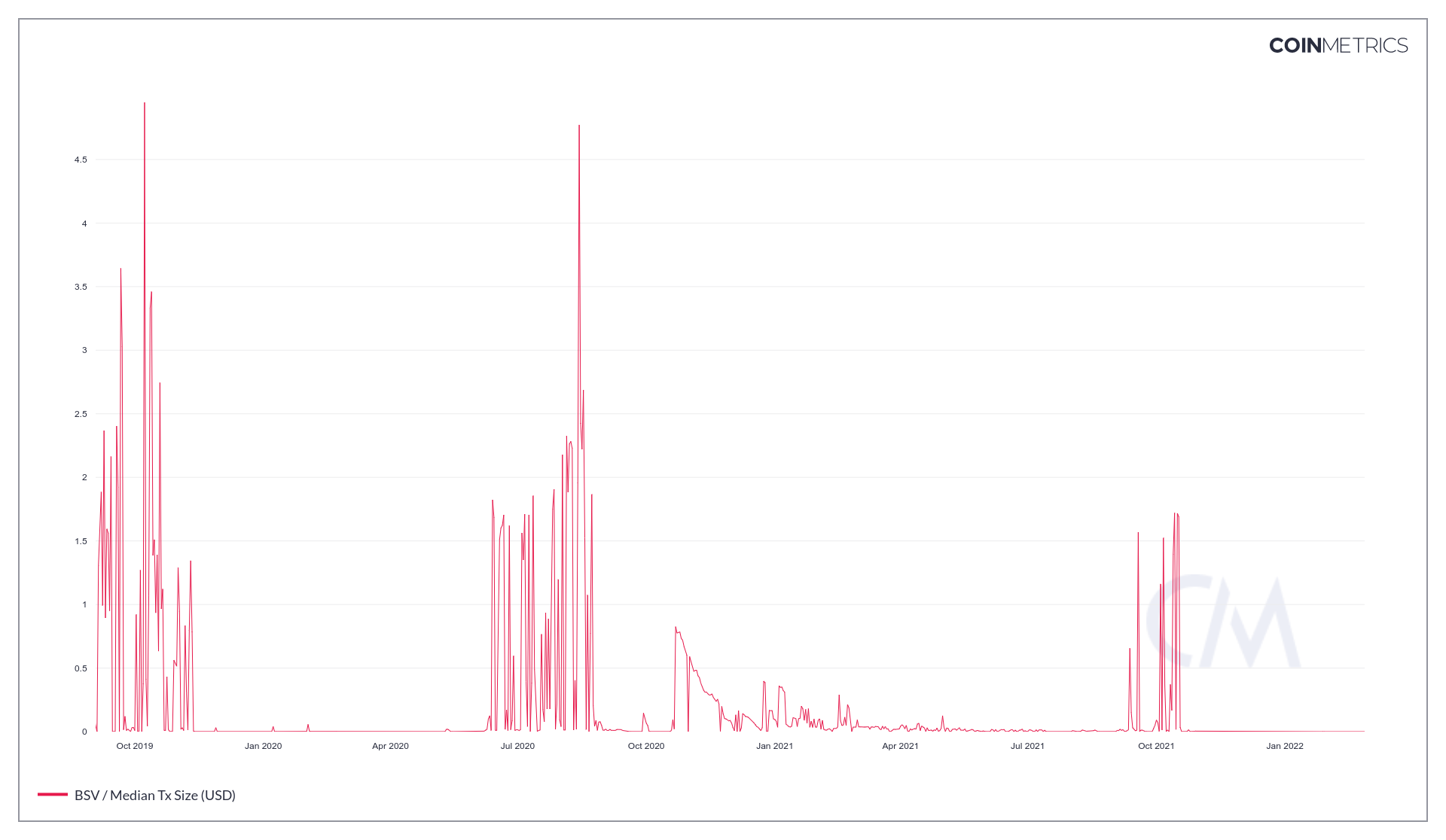

We can see that one fun result of these shenanigans is that the median value transfer amount on BSV these days is effectively 0.

There are no technical improvements that make BSV's node software more performant; if the network were actually tasked with handling the transaction load they claim it can handle, it would buckle under the computational requirements. Recall that a 1 GB block composed of value-transfer transactions (rather than data storage transactions) would take over 3X as long to validate as the 10 minutes the network takes to create the block. That means that 1 year's worth of such blocks would take 3+ years to validate on my benchmark machine. It's simply unsustainable.

BSV proponents will claim that the Teranode project is where the scalability improvements are happening. OK; show me the code and I'll test it! Craig Wright has been promising Teranode for 4 years. Steve Shadders promised Teranode on mainnet a year ago. I am not impressed by vaporware. Even if Teranode ever gets released, it's only going to increase the total resource requirements to run a BSV node and thus further shrink and centralize the network.

Classic Grade A BS from CSW. Though it's amusingly accurate in that he's right about his "bit con." The idea that this network could support a bunch of SPV clients is ludicrous. If there are only 27 reachable nodes then that means there are a total of ~3,000 connection slots available for SPV clients. For a deeper dive into the issue I'm referencing, see my previous post.
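The ~3,000 figure follows from assuming each reachable node keeps Bitcoin Core's default connection limits: 125 total connections minus 8 outbound leaves 117 inbound slots per node. Those defaults are an assumption here (BSV nodes may be configured differently), newer Core versions open a couple of extra outbound block-relay-only connections that would shrink the number slightly, and inbound slots are shared with other full nodes, so this is an upper bound.

```python
# Assumed Bitcoin Core-style defaults; BSV's may differ
MAX_CONNECTIONS = 125   # default -maxconnections
OUTBOUND_SLOTS = 8      # outbound full-relay connections each node makes itself


def inbound_slots(reachable_nodes: int) -> int:
    """Upper bound on inbound connections the reachable nodes can accept in total.

    These slots are shared with other full nodes, so real SPV capacity is lower.
    """
    return reachable_nodes * (MAX_CONNECTIONS - OUTBOUND_SLOTS)


print(inbound_slots(27))  # 3159, i.e. the ~3,000 figure above
```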

The Future of BSV

Is this form of "scaling" sustainable?

0.000000003 BSV per byte
× 1,000,000,000 bytes per gigabyte
× $100 per BSV
= $300 in transaction fees paid per GB of data added to the BSV blockchain.

If the max block size is 4 GB and all blocks are full, then the entire network of BSV miners (which are mostly Calvin Ayre operations) could at best expect to earn $173,000 per day in fees. And it wouldn't surprise me if the entities paying these transaction fees are themselves funded by Ayre, thus essentially moving his money from one hand to another.
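The fee arithmetic, extended to the daily ceiling, assumes 144 blocks per day (the 10-minute target) and every block filled to the 4 GB maximum; the fee rate and the $100 price are the figures used in the text. Python's fractions module keeps the calculation exact, avoiding floating-point noise on 0.000000003.

```python
from fractions import Fraction

# Inputs from the text; Fraction keeps the arithmetic exact
FEE_BSV_PER_BYTE = Fraction(3, 1_000_000_000)   # 0.000000003 BSV (0.3 satoshi) per byte
USD_PER_BSV = 100
BYTES_PER_GB = 1_000_000_000
MAX_BLOCK_GB = 4
BLOCKS_PER_DAY = 144                            # one block per ~10 minutes

usd_per_gb = FEE_BSV_PER_BYTE * BYTES_PER_GB * USD_PER_BSV
usd_per_day = usd_per_gb * MAX_BLOCK_GB * BLOCKS_PER_DAY

print(f"${usd_per_gb} per GB")            # $300 per GB
print(f"${int(usd_per_day):,} per day")   # $172,800 per day
```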

Meanwhile, the Bitcoin network over the past year has paid out anywhere from $500,000 to $15,000,000 in transaction fees per day while using about 0.05% of the data. That's between 3X and 87X the total fee revenue of a completely full BSV chain, and thousands of times more value per byte. BSV proponents will surely spin this as the network being cheap or affordable for anyone to use, but there's no way to get around the fact that this is a problem of incentives - the network must be able to pay for its own security.
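Here is that comparison spelled out against the $172,800 theoretical BSV maximum. The 3X and 87X figures come from the ratio of total daily fees; dividing that ratio by Bitcoin's ~0.05% share of the data gives the per-byte comparison, which lands in the thousands (roughly 5,800X at the low end of Bitcoin's fee range).

```python
BSV_MAX_FEES_PER_DAY = 172_800            # 4 GB blocks, 144 per day, $300 per GB
BTC_FEES_PER_DAY = (500_000, 15_000_000)  # range over the past year, per the text
BTC_DATA_FRACTION = 0.0005                # Bitcoin uses ~0.05% of that data

for btc_fees in BTC_FEES_PER_DAY:
    total_ratio = btc_fees / BSV_MAX_FEES_PER_DAY      # total fee revenue ratio
    per_byte_ratio = total_ratio / BTC_DATA_FRACTION   # fee revenue per byte ratio
    print(f"${btc_fees:,}/day: {total_ratio:.1f}X total fees, "
          f"~{per_byte_ratio:,.0f}X per byte")
```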

The cost to 51% attack the BSV blockchain at time of writing is under $3,000 an hour. BSV has already suffered such attacks, which further eroded confidence in the network's security and were the final straw that pushed some exchanges to delist it for their own protection.

Being delisted from most major exchanges has dried up BSV's liquidity, making it even harder for the network to pay for its own security via the block subsidy alone.

One thing I noticed while researching BSV for this report was that coin.dance shows stats for the other major bitcoin forks but not for BSV, presumably because they simply can't handle the data volume. It looks like they gave up in April 2021, when the page changed to display this notice.

I'm seeing similar issues on other services that compile stats for a variety of blockchains. Take coinstats.app, for example, which shows this for BSV on-chain metrics.

Imposing high costs on services that need to run nodes is not sustainable.

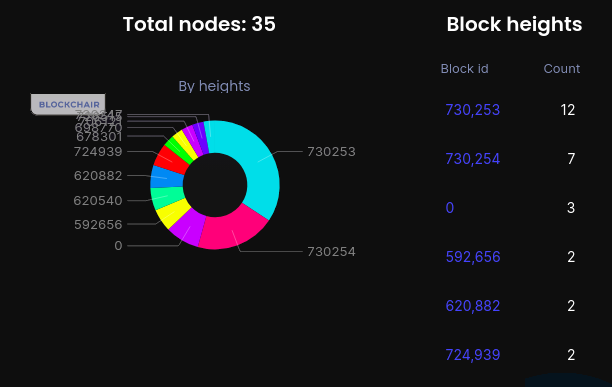

At time of writing there are only 35 reachable nodes and only 19 of those are within 5,000 blocks of chain tip. Not the sign of a particularly healthy, decentralized network.

And finally...

The trend is pretty clear - BSV hasn't even been able to hold 1% of Bitcoin's value and it's steadily approaching zero. I expect Calvin & Craig are going to have a tough time squeezing much more blood from this stone.