How to Archive & Transcribe YouTube Videos in Bulk

Recently I decided to improve my sovereignty in a new way - by reducing my reliance upon third party hosting of the audio and video content I've produced over the years.

I grow weary of people informing me that YouTube videos I'm in have been blackholed.

— Jameson Lopp (@lopp) November 5, 2022

Going forward, I will archive everything myself. Turns out YouTube doesn't like it when you download over 10GB in a day... thankfully IP addresses are cheap!

The following guide will walk you through the steps I took to accomplish this. It's a bunch of pretty technical Linux command line usage, so if you aren't fairly motivated to solve this problem for yourself, the rest of this article will be pretty boring.

The summary, for those who don't need to know the details, is that we'll leverage 2 tools along with some light scripting in order to make them process large batches of files with a single command. The first tool will download the video files from YouTube while the second will convert their audio into text.

On to the nitty gritty!

Backing Up YouTube Videos

We'll be using yt-dlp to download the videos in bulk. If you're a pip user, I'd just run "pip install yt-dlp", but you can also simply download the standalone binaries from the project's GitHub page. Note that yt-dlp is a youtube-dl fork based on the now inactive youtube-dlc. I started off using those older projects and quickly realized that yt-dlp is far more performant. It's also a highly configurable tool with over a hundred options; for brevity I'll only be mentioning a few.

Step 1: first you'll need to decide if you only want to save the videos or if you ALSO want to transcribe their audio into text files. If you want to skip the transcription steps then exclude the "--extract-audio --audio-format mp3" parameters from whichever command you decide to run.

Step 2: get a list of all your YouTube video URLs you want to back up. You'll probably just be manually copying all of the URLs into a text file. Or, if you're OCD like myself and already have a web page with all of the links on it, you can use the following command to scrape them all:

curl -f -L -s https://url-to-page-with-links | grep -Eo 'https?://\S+outu[^"]+' | xargs -I thisurl yt-dlp -k --write-auto-sub --extract-audio --audio-format mp3 thisurl

Otherwise, just feed yt-dlp a text file that contains one URL per line:

cat your-file-of-urls.txt | xargs -I thisurl yt-dlp -k --write-auto-sub --extract-audio --audio-format mp3 thisurl
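If you'd rather review the list before anything gets downloaded, the scraping step can be split from the download step. What follows is only a minimal sketch of that idea: the function name extract_youtube_urls is made up for illustration, and the pattern is the same rough match on "outu" as above, so it assumes the links sit inside double-quoted attributes such as href="...".

```shell
# Read HTML on stdin and print each YouTube-looking URL once, sorted.
# Assumption: every URL ends at a double quote or at whitespace.
extract_youtube_urls() {
    grep -Eo 'https?://[^"[:space:]]*outu[^"[:space:]]+' | sort -u
}

# Hypothetical usage (needs network access, so it is left commented out):
#   curl -f -L -s https://url-to-page-with-links | extract_youtube_urls > your-file-of-urls.txt
```

The resulting file can be checked by hand and then fed to the xargs command above.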

WARNING: if any of the URLs are YouTube playlist URLs, yt-dlp will download every single video on that playlist. You can pass "--flat-playlist" to yt-dlp to prevent that, or "--no-playlist" when a URL points at a single video that merely sits inside a playlist. I'd also suggest using "--restrict-filenames" to prevent weird stuff like emojis showing up in your file names, which can cause issues with later processing steps.

Alternatively, if you have (or create) a YouTube playlist and want to grab every video on it, just use:

yt-dlp -k --write-auto-sub --extract-audio --audio-format mp3 --yes-playlist <playlist-url>

Step 3: wait. This may take a very long time to run depending upon how many videos you have and their total length. If it looks like YouTube is throttling your download speed, you can work around that by running multiple yt-dlp jobs in parallel by tweaking xargs parameters:

cat your-file-of-urls.txt | xargs -P 10 -I thisurl yt-dlp -k --write-auto-sub --extract-audio --audio-format mp3 thisurl

Note that "-P 10" is what really speeds things up: it tells xargs to run up to 10 yt-dlp processes in parallel, while "-I thisurl" already hands each process exactly one URL.

Potential Problems

Unfortunately, YouTube doesn't take kindly to a single IP address eating up far more bandwidth than simple streaming would. If you're lazy or patient then you can just run the command in serial without the "-P 10" argument. If you're in more of a hurry, I recommend using a VPN so that you can easily change IP addresses if they ban you. From my testing, YouTube starts returning 403 (Forbidden) errors after about 20 GB is downloaded in a single day. The nice thing is that the yt-dlp command will gracefully resume partial downloads, so it's not a big deal to switch IPs and re-run the original batch command.
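Because a ban can arrive partway through a batch, it helps to know which URLs failed so that only those need retrying after an IP change. Here is a minimal sketch of that idea under stated assumptions: the names download_batch, YTDLP and failed-urls.txt are hypothetical, and it relies only on yt-dlp exiting with a non-zero status when a download fails.

```shell
# Downloader command; overridable so the loop can be dry-run with a stub.
YTDLP="${YTDLP:-yt-dlp}"

# download_batch URL_FILE FAILED_FILE
# Runs the downloader once per URL (serially) and records every URL whose
# download exits non-zero in FAILED_FILE. Use two different files.
download_batch() {
    url_file="$1"
    failed_file="$2"
    : > "$failed_file"                  # start with an empty failure list
    while IFS= read -r url; do
        [ -n "$url" ] || continue       # skip blank lines
        if ! "$YTDLP" -k --write-auto-sub --extract-audio --audio-format mp3 "$url" < /dev/null; then
            printf '%s\n' "$url" >> "$failed_file"
        fi
    done < "$url_file"
}

# Hypothetical usage:
#   download_batch your-file-of-urls.txt failed-urls.txt
#   (switch IP address, then)
#   download_batch failed-urls.txt still-failed.txt
```

This version trades the parallelism of "-P 10" for a clear record of what still needs downloading.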

Preserving Your Backups

If you don't care about transcribing your videos, now you're almost done! The last step will be for you to decide how to back up your archive of video files so that you're confident they won't be lost if they are removed from YouTube. Of course there are innumerable ways to do backups; I'd suggest checking out my guide for creating secure cloud backups.

Transcribing in Bulk

If you're lucky, you'll also be able to grab subtitle files for the videos by passing the "--write-subs" parameter to yt-dlp (the "--write-auto-sub" parameter in the commands above grabs YouTube's auto-generated captions instead). If a video doesn't have any subtitles, you'll want to feed the mp3 through Whisper to transcribe it. In my case, I'm going to use Whisper for everything because I suspect it will result in a more accurate transcription. I covered Whisper's performance and accuracy in a recent post.

As my previous article noted, it's highly recommended to run Whisper on a CUDA-compatible GPU - it will be ~50X faster than just using your CPU. I ran my initial tests for the previous article on a GPU-focused VPS running Ubuntu and found it was pretty easy to get set up with the appropriate drivers. This time around I decided to cheap out and use a Windows gaming machine I had on hand. I'll note that it was far more difficult for me to get Whisper working on Windows with CUDA - it doesn't seem to be as well supported. I ended up having to manually downgrade my Python version and manually change the version of the Python torch library; it took me several hours of internet sleuthing to figure out that this was the solution to my problems.

Eventually I found that my Nvidia GeForce RTX 2080 Ti is able to transcribe over 5 hours of audio per hour using the medium model. Thus I was able to transcribe my 100+ hours of content in about a day.
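The estimate is simple arithmetic: hours of audio divided by the speed-up factor. A tiny sketch for plugging in other numbers follows; the function name estimate_hours is made up for illustration, and the factor of 5 is just the lower bound quoted above for this particular GPU and model, so other hardware will differ.

```shell
# estimate_hours AUDIO_HOURS SPEED_FACTOR
# Prints the approximate wall-clock hours needed to transcribe AUDIO_HOURS
# of audio when SPEED_FACTOR hours of audio are processed per hour.
estimate_hours() {
    awk -v audio="$1" -v speed="$2" 'BEGIN { printf "%.1f\n", audio / speed }'
}

estimate_hours 100 5
```

For 100 hours of audio at a factor of 5 this prints 20.0, which lines up with "about a day".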

All you need to do is gather your audio files (mp3 / m4a / wav) and put them all in the same directory. Then with one batch command we can iterate over them with Whisper.

Bulk Windows (PowerShell) processing:

Get-ChildItem -Recurse -Include "*.mp3","*.m4a","*.wav" D:\path\to\audio\files\ | % {$outfile = Join-Path $_.DirectoryName ($_.BaseName + '.txt'); & whisper.exe --model medium --language English --device cuda $_.FullName > $outfile}

Bulk Linux (bash) processing:

find /path/to/audio/files -type f \( -name "*.mp3" -o -name "*.m4a" -o -name "*.wav" \) -exec sh -c 'whisper --model medium --language English --device cuda "$1" > "$1.txt"' _ {} \;

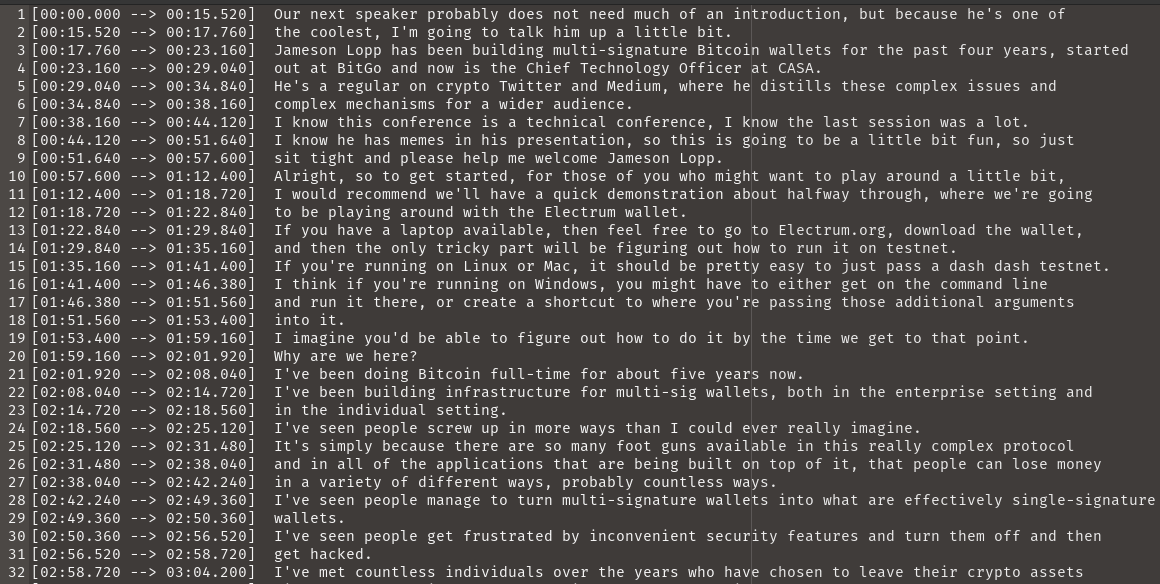
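A long batch can get interrupted, and re-running the one-liner would transcribe everything again from scratch. The following is a rough sketch of a resumable variant, not the command used above: the names transcribe_all and WHISPER are hypothetical, and it assumes file names contain no newline characters.

```shell
# Transcriber command; overridable so the loop can be tested with a stub.
WHISPER="${WHISPER:-whisper}"

# transcribe_all DIR
# Transcribes every mp3/m4a/wav under DIR into "<audio file>.txt", skipping
# files that already have a non-empty transcript from an earlier run.
transcribe_all() {
    find "$1" -type f \( -name '*.mp3' -o -name '*.m4a' -o -name '*.wav' \) |
    while IFS= read -r audio; do
        out="$audio.txt"
        if [ -s "$out" ]; then
            echo "skipping, already transcribed: $audio" >&2
            continue
        fi
        # Write to a temporary name first so an interrupted run never
        # leaves a partial file that looks finished.
        if "$WHISPER" --model medium --language English --device cuda "$audio" \
                > "$out.part" < /dev/null; then
            mv "$out.part" "$out"
        else
            rm -f "$out.part"
            echo "failed: $audio" >&2
        fi
    done
}

# Hypothetical usage:
#   transcribe_all /path/to/audio/files
```

The output naming matches the bash one-liner, so the post-processing steps further down apply unchanged.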

This will give us a bunch of text files with the raw output from Whisper. Unfortunately, there doesn't appear to be an option to tell Whisper to skip printing out the timestamps for each segment of transcribed text. Thankfully, they are so well-formatted that with a few more commands we can strip out the timestamps.

First, let's remove any whitespace from the file names to make the later commands simpler. You can do that in bash on Linux with this one-liner:

for f in *\ *; do mv "$f" "${f// /_}"; done

Next we need to strip an extraneous 2 bytes off the beginning of each file. I'm not sure why Whisper is outputting those bytes... maybe it's because I ran Whisper on a Windows machine and then did the post-processing on a Linux machine. We need 2 commands to do this because "tail" doesn't support in-place file rewriting.

find . -name "*.txt" -type f -exec sh -c 'tail -c +3 "$1" > "$1.tmp"' _ {} \;

find . -name "*.tmp" -type f -exec sh -c 'mv "$1" "${1%.tmp}"' _ {} \;

Now we're ready to actually strip out the timestamp prefixes from each line, which the following command will accomplish:

find . -name "*.txt" -type f -print0 | xargs -0 sed -i -r 's/^.+]....//g'

One thing that I found quite helpful is that it appears Whisper is (sometimes) able to differentiate between different voices and it separates their text into different paragraphs. This saved me a ton of time to make my interviews more transcript-like.
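A note on the sed pattern used for stripping timestamps: it is fairly loose, since ".+]" is greedy and matches up to the last "]" on a line, after which the four dots remove whatever four characters follow. As an alternative sketch, assuming each line starts with a bracketed range such as [00:00.000 --> 00:07.000] followed by spaces (check your own output first), a stricter expression removes only that leading prefix and leaves any later brackets in the text alone. The function name strip_timestamps is hypothetical.

```shell
# Read Whisper console output on stdin and drop a leading
# "[start --> end]" prefix plus the whitespace that follows it.
# Lines without such a prefix pass through unchanged.
strip_timestamps() {
    sed -E 's/^\[[0-9:.]+ --> [0-9:.]+\][[:space:]]*//'
}

# Hypothetical usage on one file:
#   strip_timestamps < talk.mp3.txt > talk.clean.txt
```

Because it writes to a new file instead of editing in place, the raw Whisper output stays around in case the pattern needs adjusting.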

After this point I just did some light editing of the transcripts, mostly doing find and replace on people's names, which Whisper can have a hard time with.

Finis

That's all there is to it! Hopefully the time I spent figuring out all of the above commands will save some of you a lot of effort in following my footsteps.

If you have any issues following this guide, or happen to be a Windows powershell expert who can contribute powershell versions of my bash commands, don't hesitate to contact me!